Originally published at: Video Encoding: The Big Streaming Technology Guide [2023]

The days of clunky VHS tapes and DVDs have become a distant memory. Thanks to data compression technologies like video encoding, we’re now able to stream high-quality videos to connected devices by simply pressing play.

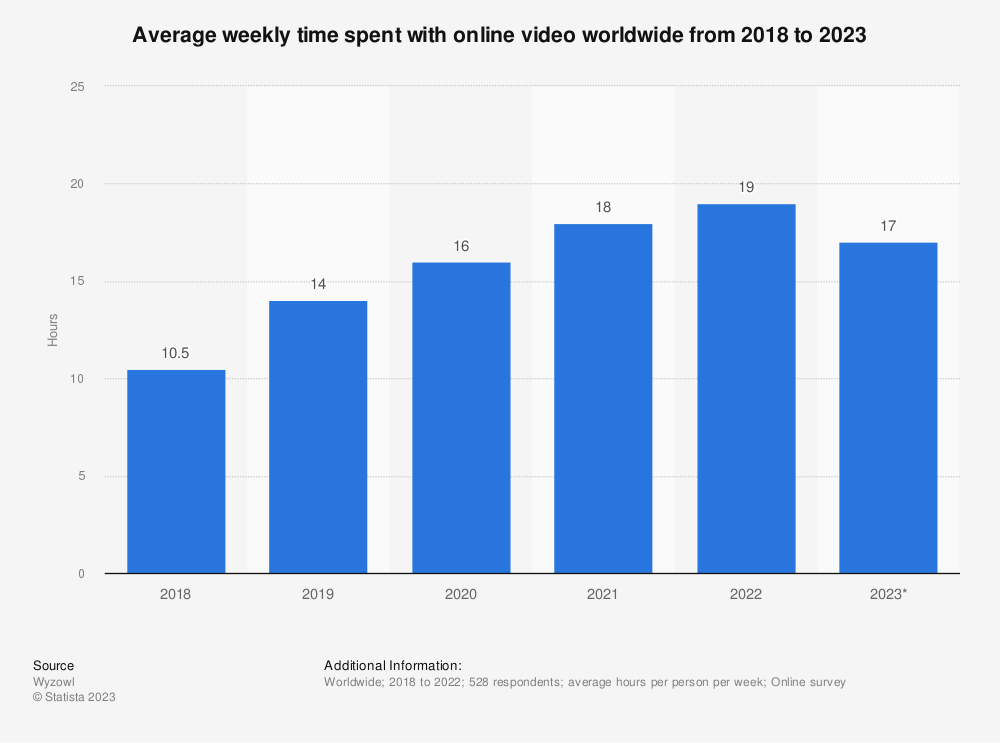

Everything from premium shows and motion pictures to user-generated content (UGC) is delivered via the internet today. Online video consumption has never been higher, with the average viewer spending a whopping 19 hours a week watching digital videos.

Two things have fueled this trend: the move from analog to digital and advancements in data compression. Video encoding handles both, making the distribution of streaming content efficient for publishers and abundantly available to end users.

Whether it’s a Netflix series, an interactive fitness class, or a Zoom call with coworkers, streaming video is everywhere. But plenty goes on in the background to prepare digital content for multi-device delivery. Encoders play a pivotal role in shrinking down the data of today’s high-definition videos without sacrificing quality.

In this guide, we dive into what video encoding is and how it works. We also explore the differences between hardware vs. software encoding, lossy vs. lossless compression, and encoding vs. transcoding (two terms that are often used interchangeably).

Use our interactive table of contents to navigate to the section of your liking or start reading for the whole picture.

Table of Contents

- What is video encoding?

- What is video compression?

- How is video encoded?

- Hardware vs. software encoding

- What are the most common encoding formats?

- What is transcoding?

- How is video transcoded?

- Where is video transcoding deployed?

- Considerations when architecting your encoding pipeline

- Top encoding software and hardware

- Top transcoding services

- Best video codecs

- Video quality vs. video resolution

- What is adaptive bitrate streaming?

- How to do multi-bitrate video encoding with Bitmovin

- Common video encoding challenges

- Streaming video encoding glossary

- Video encoding FAQs

- Conclusion

What is video encoding?

Video encoding is the process of converting RAW video into a compressed digital format that can be stored, distributed, and decoded. This type of processing is what made it possible to compress video data for storage on DVDs and Blu-ray discs back in the day. And today, it powers online video delivery of every form.

Encoding is essential for streaming. Without it, the video and audio data contained in a single episode of Ted Lasso would be far too bulky for efficient delivery across the internet. Encoding is also what makes today’s videos digital: It transforms analog signals into digital data that can be played back on common viewing devices. These devices — computers, tablets, smartphones, and connected TVs — have built-in decoders that then decompress the data for playback.

That said, you’ve likely streamed before without using a standalone encoding appliance. How could this be? Well, for user-generated content (UGC) and video conferencing workflows, the encoder is similarly built into the mobile app or camera. Embedded encoding solutions like these work just fine for simple broadcasts where the priority is transporting the video from point A to point B.

For more professional broadcasts, though, hardware encoders and computer software like OBS Studio (Open Broadcaster Software) come into play. Content distributors use these professional encoders to fine-tune their settings, specify which codecs they’d like to use, and take advantage of additional features like video mixing and watermarking.

Encoding always occurs early in the streaming workflow — sometimes as the content is captured. When it comes to live streaming, broadcasters generally encode the stream for transmission via the Real-Time Messaging Protocol (RTMP), Secure Reliable Transport (SRT), or another ingest protocol. The content is then converted into another video format like HTTP Live Streaming (HLS) or Dynamic Adaptive Streaming over HTTP (DASH) using a video transcoding service like Bitmovin.

What is video compression?

Video compression is the piece of video encoding that enables publishers to fit more data into less space. By squeezing as much information as possible into a limited number of bits, compression makes digital videos manageable enough for online distribution and storage.

Imagine you have a hot air balloon that needs to be transported to a different location by ground. The balloon would be too unwieldy to fit anywhere without deflating it. But by removing the air and folding the balloon up into a compact size, it would become significantly smaller and easier to handle.

Video compression works the same way. Specifically, it removes redundant information and unnecessary details for compact transmission. Just as the deflated hot air balloon becomes easier to handle, a compressed video file is more suitable for storage, global transmission, and end-user delivery.
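To put rough numbers on how much "air" there is to remove, here is a back-of-the-envelope sketch. It assumes uncompressed 1080p video at 30 frames per second with 24 bits per pixel and a 5 Mbps encoded stream; those figures are illustrative assumptions, not measurements from a specific encoder.

```python
def raw_bitrate_bps(width: int, height: int, fps: float, bits_per_pixel: int = 24) -> float:
    """Bits per second of uncompressed video (assumes no chroma subsampling)."""
    return width * height * bits_per_pixel * fps


def compression_ratio(raw_bps: float, encoded_bps: float) -> float:
    """How many times smaller the encoded stream is than the raw one."""
    return raw_bps / encoded_bps


raw = raw_bitrate_bps(1920, 1080, 30)      # assumed 1080p30 source
ratio = compression_ratio(raw, 5_000_000)  # assumed 5 Mbps target bitrate

print(f"Raw: {raw / 1e9:.2f} Gbps")        # Raw: 1.49 Gbps
print(f"Ratio: about {round(ratio)}:1")    # Ratio: about 299:1
```

Under these assumptions, the encoder has to shrink the data by a factor of roughly 300 before it is practical to stream.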

Lossless vs. lossy compression

Video compression technology falls into two camps: lossless and lossy encoding. These opposing approaches work just as they sound:

- Lossless compression describes an approach to encoding that shrinks down the file size while maintaining data integrity. With lossless compression, 100% of the original file returns when it’s decoded. ZIP files are a great example of this. They allow you to cram a variety of documents into a compressed format without discarding anything in the process.

- Lossy compression, on the other hand, describes encoding technologies that remove any data deemed unnecessary by the compression algorithms at work. The goal of lossy compression is to throw out as much data as possible while maintaining video quality. This enables a much greater reduction in file size and is always used for streaming video. When you use image formats like JPEG, you’re also using lossy compression. That’s why JPEG image files are easier to share and download than RAW image files.
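The difference between the two approaches can be sketched in a few lines of Python. The lossless half uses the standard library's `zlib` module; the lossy half uses a simple quantization function, `lossy_quantize`, which is a hypothetical stand-in for illustration and far cruder than what a real video codec does.

```python
import zlib


def lossless_roundtrip(data: bytes) -> bytes:
    """Compress and decompress; the result is byte-for-byte identical."""
    return zlib.decompress(zlib.compress(data))


def lossy_quantize(samples: list[int], step: int = 16) -> list[int]:
    """Snap each sample down to a multiple of `step`, discarding fine detail."""
    return [(s // step) * step for s in samples]


original = bytes(range(256)) * 4
assert lossless_roundtrip(original) == original  # nothing was lost

samples = [200, 201, 203, 198, 50, 52]
quantized = lossy_quantize(samples)
print(quantized)  # [192, 192, 192, 192, 48, 48]
```

The quantized values repeat far more often, so they compress better, but the original samples can no longer be recovered. That trade is the essence of lossy compression.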

How is video encoded?

Encoding works by using algorithms to find patterns, reduce redundancy and, in turn, eliminate unnecessary information. Video streaming workflows employ lossy compression to create an approximation of the original content that makes it easy to transmit the data across the internet while maintaining video quality for end users.

This involves three steps:

- Identify patterns that can be leveraged for data reduction.

- Drop all data that will go undetected by the human eye or ear.

- Quickly compress all remaining data.

Accomplishing this requires the help of video and audio codecs. Literally ‘coder-decoder’ or ‘compressor-decompressor,’ codecs are the algorithms that make it all happen. They facilitate both the compression and the decompression that occurs once the video file reaches end-users.

Temporal compression

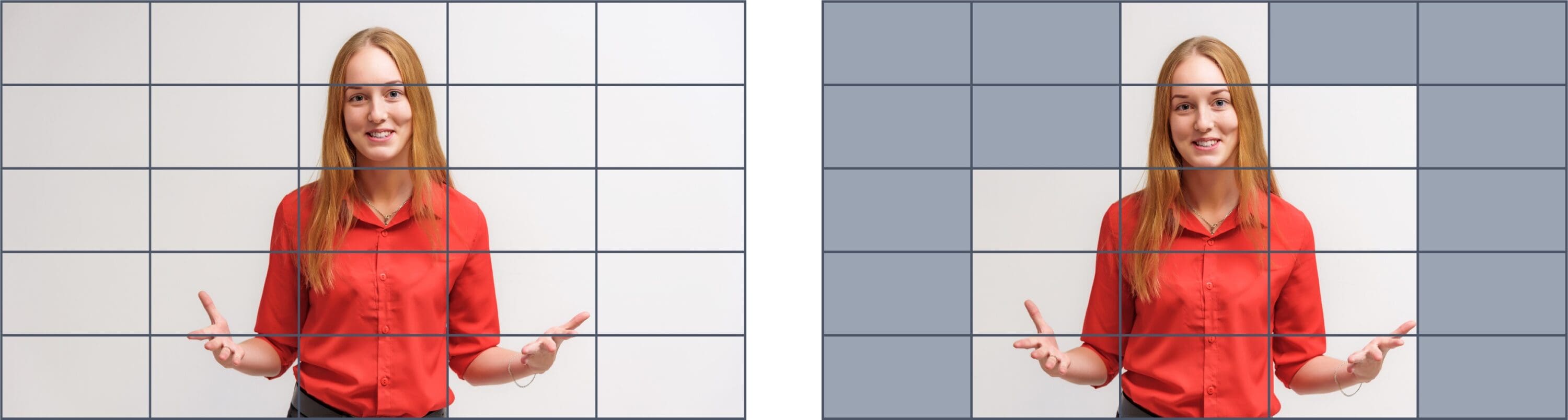

In order to capture the required visual data without going overboard on bitrate, video codecs break up the frames of a video into groupings of a single keyframe followed by several delta frames. The keyframe depicts the entire image of a video, whereas the subsequent delta frames only contain information that has changed. This is called temporal compression.

When a stagnant backdrop appears for the entirety of a talking-head news broadcast, for example, there’s no need to store all of that data in every single frame. Instead, the compression algorithm prunes down any visual data that goes unchanged and only records differences between frames. The stagnant backdrop offers a major opportunity to toss out unnecessary data, whereas the gestures and movements of the reporter standing before the backdrop are captured in the delta frames.

So, while the keyframe of a newscast will always show everything within the frame — including the reporter, their desk, the studio background, and any graphical elements — the delta frames only depict the newscaster’s moving lips and hand gestures. This is called temporal compression because it takes advantage of the fact that large portions of video images often stay similar for some time. In this way, video codecs can quickly remove excessive information rather than recreating the entire scene in each frame.
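The keyframe-plus-delta idea can be illustrated with a toy model. This sketch treats each frame as a flat list of pixel values and stores only the pixels that changed. The function names `encode_gop` and `decode_gop` are hypothetical, and real codecs go much further by using motion-compensated prediction rather than exact pixel comparisons.

```python
Frame = list[int]  # a frame as a flat list of pixel values


def encode_gop(frames: list[Frame]) -> tuple[Frame, list[dict[int, int]]]:
    """Store the first frame whole, then only the changed pixels of each later frame."""
    keyframe = list(frames[0])
    deltas = []
    previous = keyframe
    for frame in frames[1:]:
        delta = {i: new for i, (old, new) in enumerate(zip(previous, frame)) if old != new}
        deltas.append(delta)
        previous = frame
    return keyframe, deltas


def decode_gop(keyframe: Frame, deltas: list[dict[int, int]]) -> list[Frame]:
    """Rebuild every frame by applying each delta to the frame before it."""
    frames = [list(keyframe)]
    for delta in deltas:
        frame = list(frames[-1])
        for index, value in delta.items():
            frame[index] = value
        frames.append(frame)
    return frames


# Three frames of a static backdrop (9s) where only one pixel changes.
frames = [[9, 9, 9, 1], [9, 9, 9, 2], [9, 9, 9, 2]]
keyframe, deltas = encode_gop(frames)
print(deltas)  # [{3: 2}, {}]
assert decode_gop(keyframe, deltas) == frames
```

The second delta is empty because nothing changed between the last two frames, which is exactly the saving a static studio backdrop provides.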

Spatial compression

Another strategy for tossing out superfluous information is spatial compression. This involves a process of compressing the keyframes themselves by eliminating duplicate pixels in the same image. Consider the previous example. If the newscaster is presenting in front of a solid green backdrop, it’s not necessary to encode all of the green pixels. Instead, the encoder would only transmit the differences between one group of pixels and the subsequent group.

Spatial compression enables video encoders to effectively reduce the redundant information within each frame, resulting in smaller file sizes without significant loss in perceived video quality. It plays a vital role in modern video codecs like H.264 (AVC), H.265 (HEVC), AV1, and VP9, enabling efficient video encoding and transmission for various applications, including streaming, broadcasting, video conferencing, and storage.
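As a simplified illustration of removing redundancy within a single frame, the sketch below run-length encodes one row of pixels. Run-length encoding is swapped in here because it is easy to read; codecs like H.264 and HEVC rely on intra prediction and transforms instead, so treat this as an analogy rather than how those codecs work.

```python
from itertools import groupby


def rle_encode(row: list[str]) -> list[tuple[str, int]]:
    """Collapse runs of identical pixels into (value, count) pairs."""
    return [(value, len(list(group))) for value, group in groupby(row)]


def rle_decode(runs: list[tuple[str, int]]) -> list[str]:
    """Expand (value, count) pairs back into the original row."""
    return [value for value, count in runs for _ in range(count)]


# One row of a frame: green backdrop (G) with a sliver of the newscaster (S).
row = ["G"] * 6 + ["S"] * 2 + ["G"] * 4
runs = rle_encode(row)
print(runs)  # [('G', 6), ('S', 2), ('G', 4)]
assert rle_decode(runs) == row
```

Twelve pixels collapse into three pairs, because most of the row is the same green backdrop.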

Hardware vs. software encoding

As noted above, encoding can occur within a browser or mobile app, on an IP camera, using software, or via a stand-alone appliance. Dedicated software and hardware encoders make the encoding process more efficient — resulting in higher throughput, reduced processing time, and improved overall performance. They also offer more advanced configurations for precise control over the encoding parameters. This enables content creators and streaming providers to optimize the video quality, bitrate, resolution, and other aspects to meet their specific requirements and deliver the best possible viewing experience.

Dedicated hardware used to be the way to go for video encoding, but plenty of easy-to-use and cost-effective software options exist today. Popular software options for live streaming include vMix, Wirecast, and the free-to-use OBS Studio. On the hardware side of things, Videon, AJA, Matrox, Osprey, and countless other appliance vendors offer purpose-built solutions for professional live broadcasting.

When it comes to VOD encoding, FFmpeg is a popular software option. Vendors like Harmonic and Telestream also offer hardware options.

The decision between software and hardware encoding often comes down to existing resources, budget, and the need for any advanced configurations or features. Plenty of producers elect to use a mix of software and hardware encoding solutions for their unique workflows. The chart below highlights the pros and cons of each option.

| Software | Hardware |
|---|---|
| Cost effective and sometimes free | Can get pricey |
| Runs on your computer | Physical appliance |
| Accessible and versatile | More robust and reliable |
| Slower encoding times | Can encode quickly and in high quality |
| Power is dependent on your computing resources | Acts as a dedicated resource for encoding workload |
| Eliminates the need for additional equipment | Frees up computing resources |
| Best for simple broadcasts and user-generated content (UGC) | Best for complex productions and live television or cable studio setups |

Check out our comprehensive guide to The 20 Best Live Streaming Encoders for an in-depth comparison of the leading software and hardware encoders available.

What are the most common encoding formats?

Encoding formats is a vague term. That’s because a compressed video is ‘formatted’ in three ways:

- By the video codec that acts upon it to condense the data. Examples of popular codecs include MPEG-2, H.264/AVC, H.265/HEVC, and AV1.

- By the video container that packages it all up. Examples of popular video container formats include MP4, MOV, and MPEG-TS.

- By the streaming protocol that facilitates delivery. Examples of popular protocols include HLS, RTMP, and DASH.

Here’s a closer look at all three.

What are video codecs?

A video codec is a software or hardware algorithm that compresses and decompresses digital video data. It determines how the video is encoded (compressed) and decoded (decompressed). Different video codecs employ various compression methods, such as removing information undetectable by the human eye, exploiting spatial and temporal redundancies, and applying transformation techniques.

One popular codec you’re sure to know by name is MP3 (or MPEG-1 Audio Layer III for those who like their acronyms spelled out). As an audio codec, rather than a video codec, it plays a role in sound compression. I bring it up because it demonstrates how impactful codecs are on media consumption trends.

The MP3 codec revolutionized the music industry in the 1990s by making giant audio libraries portable for the first time. Music lovers swapped out stacks of CDs for hand-held MP3 players that stored the same amount of music without any noticeable change in audio quality. The MP3 codec did this by discarding all audio components beyond the limitations of human hearing for efficient transportation and storage.

Streaming requires the use of both audio and video codecs, which act upon the auditory and visual data independently. This is where video container formats enter the picture.

What are video containers?

A video container format, also known as a multimedia container or file format, is a file structure that wraps audio codecs, video codecs, metadata, subtitles, and other multimedia components into a single package. The container format defines the structure and organization of the data within the file, including synchronization, timecodes, and metadata. It doesn’t directly impact the compression of the video itself, but rather provides a framework for storing and delivering the compressed video and associated audio and metadata.

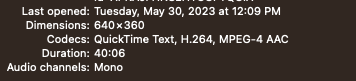

MP4 is a common container format that most know by name due to its compatibility across devices, websites, and social media platforms. Chances are, you have several MP4 files saved to your computer that encapsulate audio and video codecs, preview images, and additional metadata.

Here are the contents of an MP4 container saved to my own computer:

As you can see, this specific MP4 file contains the H.264 video codec and the AAC audio codec, as well as metadata about the video duration. Protocols like HLS and DASH support the delivery of MP4 files for streaming, which brings me to our third category.
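If you want to inspect a container yourself, one option is FFmpeg's `ffprobe` tool, which can print stream and format details as JSON. The sketch below shows the command and a small parser for its output. The file name `input.mp4` and the `SAMPLE_OUTPUT` text are hypothetical placeholders (trimmed to a few fields), not the actual file described above.

```python
import json

# Real ffprobe flags; "input.mp4" is a placeholder path.
FFPROBE_CMD = ["ffprobe", "-v", "error", "-show_format", "-show_streams",
               "-of", "json", "input.mp4"]

# Hypothetical, trimmed-down ffprobe output used for illustration only.
SAMPLE_OUTPUT = """
{
  "streams": [
    {"codec_type": "video", "codec_name": "h264", "width": 1920, "height": 1080},
    {"codec_type": "audio", "codec_name": "aac"}
  ],
  "format": {"format_name": "mov,mp4,m4a,3gp,3g2,mj2", "duration": "62.500000"}
}
"""


def summarize(probe_json: str) -> dict:
    """Pull the container name, duration, and codecs out of ffprobe JSON."""
    info = json.loads(probe_json)
    codecs = {s["codec_type"]: s["codec_name"] for s in info["streams"]}
    return {
        "container": info["format"]["format_name"],
        "duration_s": float(info["format"]["duration"]),
        "video_codec": codecs.get("video"),
        "audio_codec": codecs.get("audio"),
    }


summary = summarize(SAMPLE_OUTPUT)
print(summary["video_codec"], summary["audio_codec"], summary["duration_s"])
# h264 aac 62.5
```

To run it against a real file, you could pass `FFPROBE_CMD` to `subprocess.run(..., capture_output=True, text=True)` and feed the resulting `stdout` to `summarize`, assuming FFmpeg is installed.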

What are streaming protocols?

A video streaming protocol is a set of rules governing how video data is transmitted over a network. It defines the communication and data transfer protocols required for streaming video content to playback devices.

Each time you watch an on-demand video or live stream, video streaming protocols are used to deliver the data from a server to your device. They handle tasks like data segmentation, error correction, buffering, and synchronization. Examples of video streaming protocols include HTTP-based protocols like HLS and DASH, as well as video contribution technologies like RTSP, RTMP, and SRT for live streaming.

Different protocols require different codecs, so you’ll want to consider your intended video delivery technology when encoding your video.

Codecs, containers, and protocols summarized:

A video codec handles the compression and decompression of video data; a video container format organizes and packages the compressed video, audio, and metadata into a single file; and a video streaming protocol governs the transmission and delivery of video content over a network. Each component plays a distinct role in the overall process of encoding, packaging, and streaming video content.

But what if you need to package your video into multiple different formats to ensure broad compatibility and optimize distribution? No problem. Video publishers often process and repackage streaming content after it’s initially encoded using a transcoding service like Bitmovin.

What is transcoding?

Transcoding involves taking a compressed stream, decompressing and reprocessing the content, and then encoding it once more for delivery to end users. This step always occurs after video content has first been encoded, and sometimes doesn’t occur at all. Unlike encoding, it employs a digital-to-digital conversion process that’s more focused on altering the content than compressing it.

A primary reason for transcoding live videos is to repackage RTMP-encoded streams for delivery via HTTP-based protocols like Apple’s HLS. This is vital because RTMP is no longer supported by end-user devices or players, making transcoding a critical step in the video delivery chain.

When it comes to VOD, transcoding is used to change mezzanine formats like XDCAM or ProRes into a streamable format. These formats are proprietary and not supported by end-user devices. By transcoding the mezzanine formats into streamable formats like MP4 or HLS, the content becomes broadly accessible.

Transcoding is also done to break videos up into multiple bitrate and resolution renditions for adaptive bitrate delivery. This ensures smooth playback in the highest quality possible across a wide range of devices.

Here’s a look at the different processes that fall under the transcoding umbrella:

Standard transcoding

In most video streaming workflows, the files must be converted into multiple versions to ensure smooth playback on any device. Broadcasters often elect to segment these streams for adaptive bitrate delivery using an HTTP-based protocol like HLS or DASH. That way, viewers can access the content across their mobile devices and smart TVs, without having to worry about whether the encoded video content is optimized for their screen size and internet speed.
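With HLS, the set of renditions is advertised to the player in a master playlist, which lists each version with its bandwidth and resolution. The sketch below generates a minimal one. The `Rendition` class, the ladder values, and the URIs are assumptions made for illustration; production playlists usually carry additional attributes such as `CODECS`.

```python
from dataclasses import dataclass


@dataclass
class Rendition:
    width: int
    height: int
    bandwidth_bps: int
    uri: str  # hypothetical path to this rendition's media playlist


def master_playlist(renditions: list[Rendition]) -> str:
    """Build a minimal HLS master playlist, lowest bandwidth first."""
    lines = ["#EXTM3U"]
    for r in sorted(renditions, key=lambda r: r.bandwidth_bps):
        lines.append(
            f"#EXT-X-STREAM-INF:BANDWIDTH={r.bandwidth_bps},RESOLUTION={r.width}x{r.height}"
        )
        lines.append(r.uri)
    return "\n".join(lines) + "\n"


ladder = [
    Rendition(1920, 1080, 5_000_000, "1080p/index.m3u8"),
    Rendition(1280, 720, 3_000_000, "720p/index.m3u8"),
    Rendition(854, 480, 1_500_000, "480p/index.m3u8"),
]
print(master_playlist(ladder))
```

The player reads this file, measures the viewer's connection, and picks whichever rendition it can play back smoothly.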

Transrating

Transrating is a subcategory of transcoding that involves changing the bitrate to accommodate different connection speeds. With pure transrating, the video content, format, and codec would remain unaltered. Only the bitrate would change. An example of this would be shrinking a 9Mbps stream down to 5Mbps.

Transsizing

Also under the transcoding umbrella, transsizing takes place when content distributors resize the video frame to accommodate different resolution requirements. For instance, taking a 4K stream and scaling it down to 1080p would be an example of transsizing. This would also result in bitrate reductions, which is why overlap between all of these terms is common.
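The arithmetic behind transrating and transsizing is simple enough to sketch. The helper names below are hypothetical. Rounding the width to an even number is an assumption based on the fact that many encoder configurations using 4:2:0 chroma subsampling expect even dimensions.

```python
def bitrate_saving(source_bps: float, target_bps: float) -> float:
    """Fraction of bandwidth saved by transrating from source to target."""
    return (source_bps - target_bps) / source_bps


def scale_to_height(width: int, height: int, target_height: int) -> tuple[int, int]:
    """Resize to a target height, keeping the aspect ratio and an even width."""
    scaled_width = width * target_height / height
    even_width = int(round(scaled_width / 2)) * 2
    return even_width, target_height


print(f"{bitrate_saving(9_000_000, 5_000_000):.0%}")  # 44%
print(scale_to_height(3840, 2160, 1080))              # (1920, 1080)
print(scale_to_height(1280, 720, 480))                # (854, 480)
```

Going from 4K to 1080p cuts the pixel count to a quarter, which is why transsizing almost always brings a lower bitrate along with it.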

So why do we need the extra step of transcoding, when many of these processes could be accomplished during encoding? It comes down to efficiency and scalability.

Most content distributors prefer to encode a master file (or mezzanine file) up front and then rework it as needed. The purpose of a mezzanine file is to provide a high-fidelity source for generating different versions of the content optimized for specific delivery platforms or devices. Mezzanine files serve as the basis for creating multiple versions with different bitrates, resolutions, or codecs for large-scale distribution. Transcoding also enables broadcasters to tackle the more computationally-intensive tasks in the cloud rather than doing all of their video processing on premises.

Transcoding can be done using an encoding solution like Bitmovin, a streaming platform like YouTube that has transcoding technology built into its infrastructure, or an on-premises streaming server.

Video encoding vs. transcoding: What’s the difference?

The terms transcoding and encoding are often conflated. We’ve even used the two interchangeably here at Bitmovin. But the primary differences are as follows:

- Encoding is an analog-to-digital conversion; transcoding is a digital-to-digital conversion.

- Encoding is necessary to stream video content; transcoding isn’t always required.

- Encoding occurs directly after video content is captured; transcoding doesn’t occur until later when the content has been transmitted to a streaming server or cloud-based service.

To reuse an analogy from a previous post, the difference between encoding and transcoding is similar to the way crude oil is processed in the gasoline supply chain:

- First, crude oil is extracted from underground reservoirs. This crude oil can be thought of as the RAW video source itself.

- Next, the crude oil is refined into gasoline for bulk transport via pipelines and barges. This is the encoding stage, where the video source is distilled to its essence for efficient transmission.

- Finally, the gasoline is blended with ethanol and distributed to multiple destinations via tanker trucks. This represents the transcoding step, where the content is altered and packaged for end-user delivery.

Live transcoding vs. VOD transcoding

Another reason for the overlap in the usage of these two terms has to do with the nuance between live and video-on-demand (VOD) transcoding.

VOD transcoding involves processing pre-existing video files — such as movies, TV shows, or recorded events — and transforming them into suitable formats and bitrates for efficient storage and delivery. This type of video processing can be performed at any time, independently of the actual playback, allowing for more extensive processing and optimization.

Live transcoding, on the other hand, involves processing live data that’s in flight. It occurs immediately after the video is captured and moments before the video is viewed. Timing is everything in live streaming workflows, and all of the steps must take place in concert. For this reason, the nuance between ‘encoding’ and ‘transcoding’ is more pronounced when discussing live streaming workflows.

How is video transcoded?

Video transcoding is a multi-step process:

- Decoding: The encoded stream is decoded using the video codec.

- Processing: The uncompressed video file is edited and processed if needed. This can include resizing to different resolutions, changing the aspect ratio, adjusting the frame rate, or applying video effects.

- Encoding: The altered video is re-encoded, potentially using different settings and/or codecs than those with which it was initially encoded.

- Packaging: The transcoded stream is then packaged in a container format suitable for storage or delivery. The container format encapsulates the encoded video and audio streams along with necessary metadata, synchronization information, subtitles, and other supplemental data.
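With a tool like FFmpeg, all four steps can happen in a single command: it decodes the input, applies a filter, re-encodes, and writes the result into the container implied by the output file name. The sketch below only builds the argument list. The function name `build_transcode_cmd`, the file names, and the bitrates are assumptions chosen for illustration, and the `libx264` encoder must be available in your FFmpeg build.

```python
def build_transcode_cmd(src: str, dst: str, width: int, height: int,
                        video_kbps: int, audio_kbps: int = 128) -> list[str]:
    """Assemble an ffmpeg command that rescales and re-encodes a file."""
    return [
        "ffmpeg",
        "-i", src,                          # decoding: read and decode the source
        "-vf", f"scale={width}:{height}",   # processing: resize the frames
        "-c:v", "libx264",                  # encoding: H.264 video
        "-b:v", f"{video_kbps}k",
        "-c:a", "aac",                      # encoding: AAC audio
        "-b:a", f"{audio_kbps}k",
        dst,                                # packaging: container chosen by extension
    ]


cmd = build_transcode_cmd("mezzanine.mov", "output_720p.mp4", 1280, 720, 3000)
print(" ".join(cmd))
```

To execute it, you could hand `cmd` to `subprocess.run(cmd, check=True)` on a machine with FFmpeg installed.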

As mentioned above, this resource-intensive process requires significant computational power and time. For this reason, it’s important to consider your existing resources and where you want to tackle this part of the video streaming pipeline.

Where is video transcoding deployed?

Transcoders come in two flavors: on-premises transcoding servers or cloud-based services like Bitmovin. Here’s a look at the different deployment models we see developers using.

On-premises transcoding

Some video publishers choose to purchase transcoding servers or deploy transcoding software in their on-premises environments. This route puts the onus on them to set up and maintain equipment — yielding additional security but also requiring more legwork to architect the streaming workflow across multiple vendors. On-premises transcoding is only a viable option for organizations with enough resources to manage every aspect of their technology stack.

When going with on-premises deployment, you’ll want to overprovision computing resources to prepare for any unpredictable spikes in viewership. Many companies that experienced surging demand during the pandemic switched to cloud-based transcoding solutions for this reason.

Lift-and-shift cloud transcoding

Some content distributors host their transcoding software in the cloud via a lift-and-shift model. This occurs when organizations rehost their streaming infrastructure in a public or private cloud platform without optimizing their applications for the new environment. Although lift-and-shift deployments ease the burden of equipment maintenance and improve scalability, they fail to fully deliver on the promise of the cloud.

Cloud-native transcoding

“Cloud native” describes any applications that take full advantage of cloud computing. This can be delivered via software-as-a-service (SaaS) offerings like the Bitmovin Video Encoder or it can be built in house. With cloud-native transcoding, developers benefit from the most flexible and scalable streaming infrastructure possible. This is the most cost- and energy-efficient of all three deployment models, making it a more sustainable approach to streaming.

According to Amazon Web Services (AWS):

“Cloud native is the software approach of building, deploying, and managing modern applications in cloud computing environments. Modern companies want to build highly scalable, flexible, and resilient applications that they can update quickly to meet customer demands. To do so, they use modern tools and techniques that inherently support application development on cloud infrastructure. These cloud-based technologies allow for quick and frequent changes to applications with no impact on service, giving companies an advantage.”

Source: Amazon Web Services (AWS)

Beyond offering lower capital expenditures for hardware, software, and operating costs, cloud-native transcoding makes it easy to scale. Video encoding expert Jan Ozer weighs in:

“Two types of companies should consider building their own encoding facilities. At the top end are companies like Netflix, YouTube, and others, for which the ability to encode at high quality, high capacity, or both delivers a clear, competitive advantage. These companies have and need to continue to innovate on the encoding front, and you can do that best if you control the entire pipeline.

At the other end are small companies with relatively straightforward needs, in which anyone with a little time on their hands can create a script for encoding and packaging files for distribution… Otherwise, for high-volume and/or complex needs, you’re almost always better off going with a commercial software program or cloud encoder.”

Source: Jan Ozer

It’s worth adding that with cloud-based deployment, you’ll never have to worry about peaks and valleys in usage or spinning up new servers. Instead, you can offload management duties and maintenance costs to your service provider while benefiting from the built-in redundancy and limitless flexibility of the cloud.

Bitmovin’s solution is based on Kubernetes and Docker to deliver on the cloud’s promise of infinite scalability and flexibility. It can be deployed in customer-owned accounts or as a managed SaaS solution using AWS, Azure, and/or Google Cloud Platform.

Considerations when architecting your encoding pipeline

When architecting the encoding pipeline of your digital video infrastructure, you’ll want to consider your requirements:

- Format and codec support: Verify that the products and services you select can support the input and output formats required.

- Output quality: Look for solutions that offer high-quality encoding with minimal loss or degradation. Consider factors such as bitrate control, support for advanced video codecs (e.g., H.264, HEVC), and the ability to handle various resolutions and frame rates.

- Scalability and performance: Confirm that your encoding and transcoding solution can efficiently handle the scale of your broadcasts. Cloud-based solutions offer an advantage here.

- Security and content protection: If you’re broadcasting sensitive or copyrighted content, you’ll want to look for digital rights management (DRM) support, watermarking, encryption, and the like.

- APIs and integration: Look for solutions that offer comprehensive API documentation, SDKs, and support for popular programming languages to ensure seamless integration with your existing workflows.

- Per-title encoding: Make sure the encoding/transcoding solution you choose offers per-title capabilities — meaning that the encoding ladder is customized to the complexity of each video. With per-title encoding, you’re able to combine high-quality viewing experiences with efficient data usage by automatically analyzing and optimizing the adaptive bitrate ladder on a case-by-case basis. After all, each video file is unique. So you risk wasting bandwidth or compromising quality without per-title encoding.
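To make the per-title idea concrete, here is a deliberately simplified sketch. It assumes a single `complexity` score has already been produced by some analysis step and scales a fixed base ladder by it. The score, the clamp range, and the ladder values are all hypothetical; this is not Bitmovin's algorithm, and real per-title systems may also change which resolutions appear in the ladder.

```python
# Hypothetical base ladder: (width, height, video bitrate in kbps).
BASE_LADDER = [(1920, 1080, 5000), (1280, 720, 3000), (854, 480, 1500)]


def per_title_ladder(complexity: float, base=BASE_LADDER) -> list[tuple[int, int, int]]:
    """Scale bitrates by a complexity score (1.0 = average content).

    The score is clamped to 0.5-1.5 so no rendition drifts too far
    from the base ladder.
    """
    factor = min(max(complexity, 0.5), 1.5)
    return [(w, h, round(kbps * factor)) for w, h, kbps in base]


print(per_title_ladder(0.6))  # simple content, e.g. a slideshow or cartoon
# [(1920, 1080, 3000), (1280, 720, 1800), (854, 480, 900)]
print(per_title_ladder(2.0))  # complex content, clamped to 1.5
# [(1920, 1080, 7500), (1280, 720, 4500), (854, 480, 2250)]
```

Simple content gets by on fewer bits, while complex content gets the extra bitrate it needs to avoid visible artifacts.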

Top encoding software and hardware

In our recent guide to the 20 Best Live Streaming Encoders, we compared the industry-leading software and hardware encoding solutions.

OBS, Wirecast, and vMix are the most popular options on the software front. These solutions range from free to upwards of a thousand dollars (for a lifetime license).

A much broader selection of hardware encoders is available, with many designed for specific use cases like enterprise collaboration or remote live video production. They can range from specialized component tools to out-of-the-box studio production kits. And while many hardware encoders help integrate all of your equipment into a fully functioning studio, you’ll want to ensure that the appliance you choose is compatible with your current gear.

Software Encoders

- OBS: Free, open-source encoding software for Windows, Mac, and Linux.

- Wirecast: Highly customizable professional encoding software for Mac and Windows.

- vMix: Easy-to-use encoding software for Windows only.

Hardware Encoders

- Videon EdgeCaster EZ Encoder: Portable encoding appliance with cloud functionality, as well as both 4K and ultra-low-latency support.

- AJA HELO Plus: Compact live streaming encoder with support for SRT contribution.

- Matrox Monarch HD: Rack-mountable encoding appliance that supports simultaneous recording.

- Osprey Talon 4K: Purpose-built 4K encoding with broad protocol support.

- VCS NSCaster-X1: Encoding touchscreen tablet that acts as a complete live production system.

- Haivision Makito X and X4: Award-winning encoder that ensures reliable low-latency streaming with SRT video contribution.

- TASCAM VS-R264: No-frills live streaming encoder designed for YouTube streaming.

- Datavideo NVS-40: Multi-channel streaming encoder that can be used for end-user delivery via HLS.

- Magewell Ultra Encode: Affordable and complete encoding appliance for video production, contribution, and monitoring.

- Blackmagic ATEM Mini: Affordable and portable option for on-the-go encoding and multi-camera setups.

- Black Box HDMI-over-IP H.264 Encoder: Straightforward H.264 encoder for delivering media over IP networks.

- Orivision H.265 1080p HDMI Encoder: Low-cost option for remote video transmission with support for SRT, HLS, and more.

- Axis M71 Video Encoder: IP-based video surveillance encoder with PTZ controls and built-in analytics.

- LiveU Solo: Portable appliance built to deliver reliable 4K video over bonded 4G and 5G.

- YoloLive: One-stop encoder, video switcher, recorder, and monitor that eliminates the need for additional equipment.

- Pearl Nano: Live video production hardware designed for small-scale events.

- Kiloview Encoders: Affordable H.264 encoder with support for SRT, HLS, and Onvif.

Check out the full comparison here for a deep dive into each option.

Top transcoding services

When it comes to transcoding, there are also a handful of open-source and free software options. These include:

- FFmpeg: A free command-line tool for converting streaming video and audio.

- HandBrake: Another transcoder originally designed for ripping DVDs.

- VLC media player: A media player that supports video transcoding across various protocols.

For professional large-scale broadcasting, though, we’d recommend using a cloud-based streaming service. You’ll want to search for something that offers the security capabilities, APIs, per-title capabilities, and codec support required for large-scale video distribution.

Robust solutions like Bitmovin integrate powerful features at every workflow stage and can be used for both live and VOD streaming. This means you’re able to simplify your video infrastructure without compromising quality and efficiency.

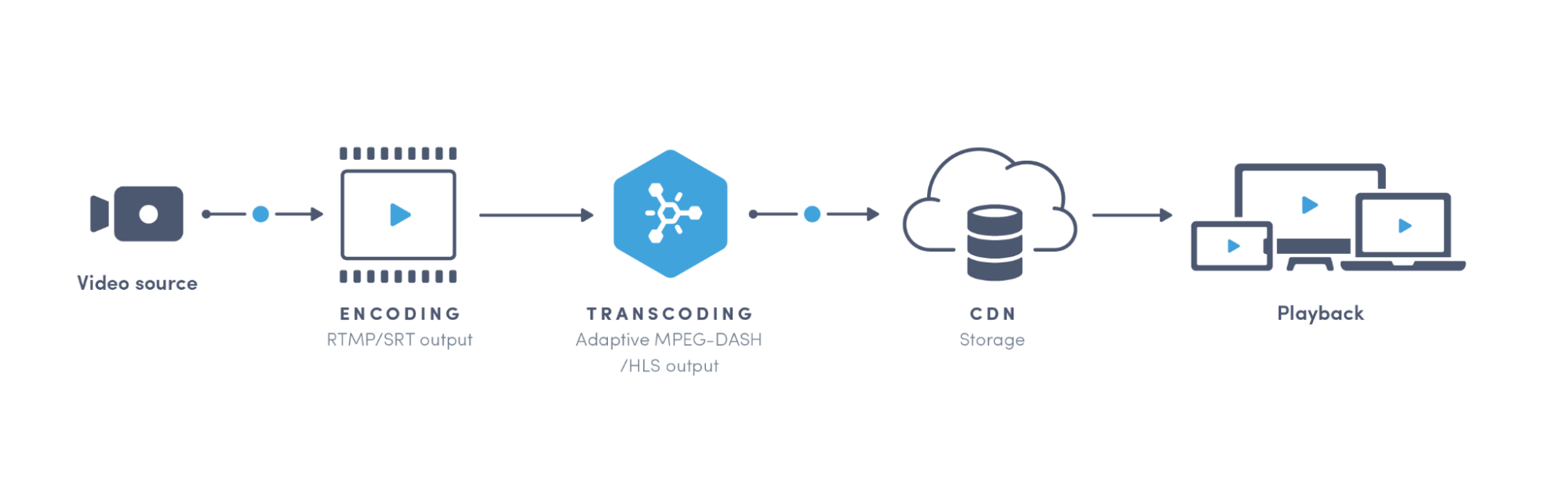

We mention this because even the simplest streaming workflows include four distinct steps:

- Video source or origin: Whether your source is a live camera or a cloud storage solution that houses your input files, the video origin is where it all begins.

- Encoding pipeline: The encoding pipeline comprises all of your encoding and transcoding systems, hardware, and software. For complex live streaming workflows, this often includes a blend of software and hardware encoding technologies, as well as a cloud-based transcoding service like Bitmovin.

- Content delivery network (CDN): These systems of geographically distributed servers are essential when delivering content to large global audiences, ensuring that the video is retrieved when your viewers push play.

- HTML5 Player: Players refer to the media software application that allows viewers to watch streaming content online without additional plugins. These ensure video compatibility across various browsers, operating systems, and devices. They also provide standard playback control, captions, and dynamic switching between ABR renditions.

Security, analytics, and other needs often further complicate these pipelines. For that reason, you’ll want to approach the entire workflow holistically, and look for solutions that can be easily integrated with others or consolidate multiple steps into a single solution.

To help simplify streaming, we launched Streams in 2022. It serves as a single platform for transcoding, CDN delivery, video playback, analytics, security, and more. This type of solution doesn’t compare apples-to-apples with standalone transcoders like FFmpeg, and that’s by design. As an all-in-one solution that’s built for the cloud, it eliminates the complexity of building your streaming infrastructure in-house.

Best video codecs

Once you’ve landed on your encoding and transcoding solutions, you’ll want to consider which video codecs are best suited for your use case. Most video developers use a variety of codecs to ensure compatibility across devices while also benefiting from the improvements in compression efficiency and quality offered by next-generation technologies.

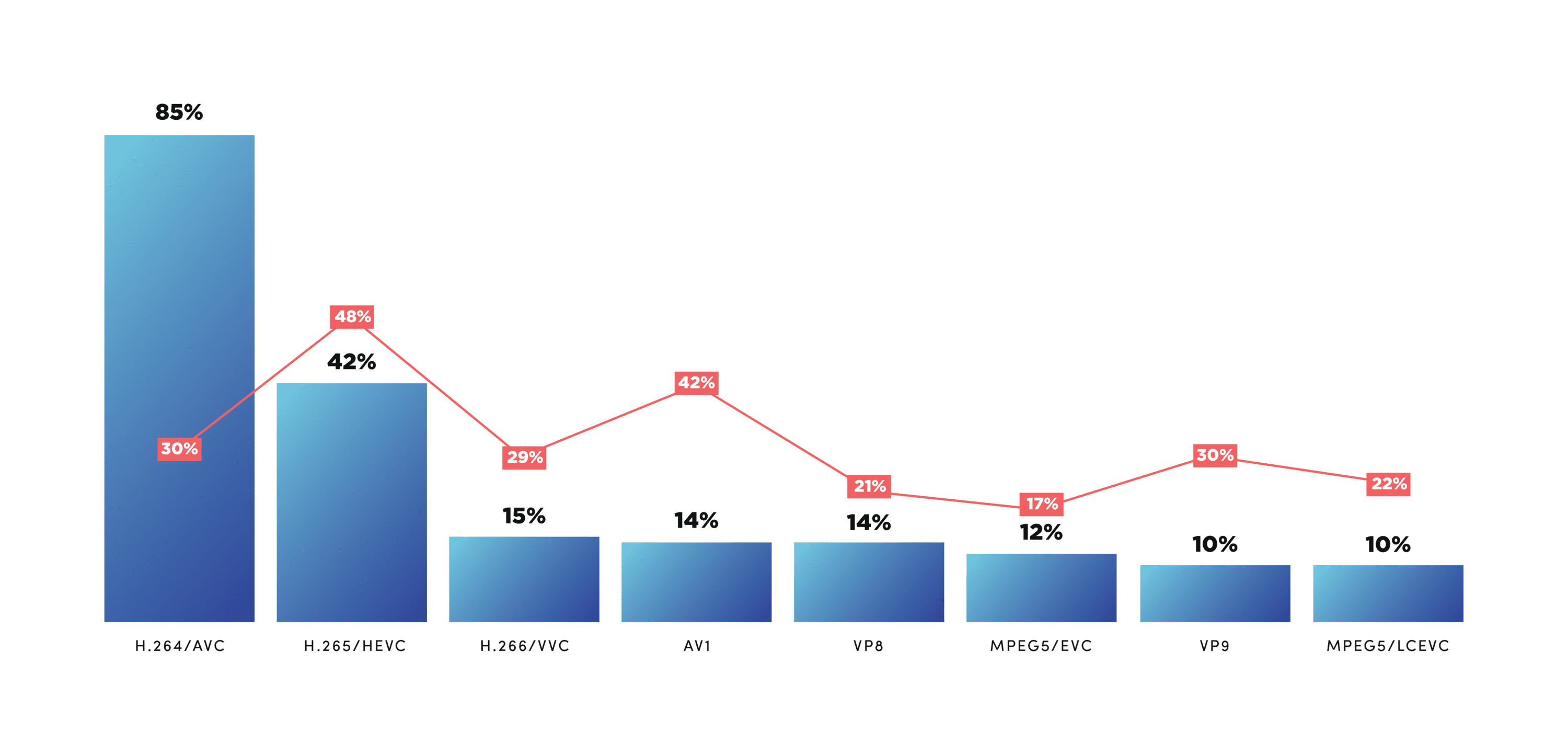

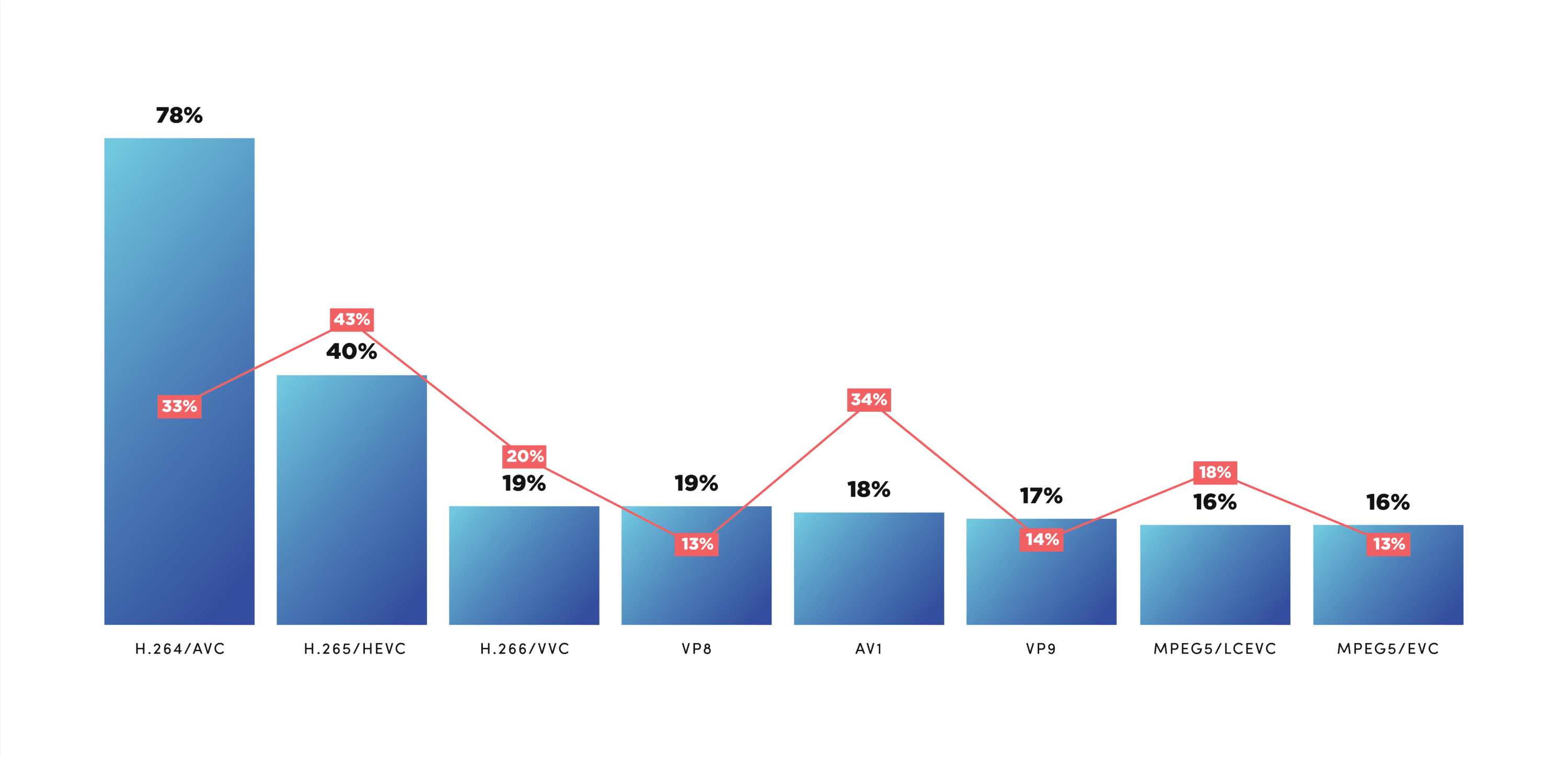

In our annual Video Developer Report, we consistently see six codecs playing a role in both live and VOD streaming workflows.

H.264/AVC

The majority of digital video takes the form of H.264/AVC (Advanced Video Coding) because it’s unparalleled in terms of device reach. As an efficient and well-supported compression technology, it lends especially well to low-latency workflows.

H.265/HEVC

HEVC encoding has been on the rise — a trend that we expect will continue since Google added support in Chrome late last year. It’s poised to become a common technology for browser-based video streaming as well as premium OTT content delivery to living room devices.

H.266/VVC

As one of the newest video codecs out there, VVC (Versatile Video Coding) usage has been lagging due to limited playback implementations from device makers. That’s all changing in 2023 (with LG’s 8K smart TVs already adding support), making VVC a good candidate for 8K and immersive 360° video content.

AV1

This open-source, royalty-free alternative to HEVC was created by the Alliance for Open Media, made up of Amazon, Netflix, Google, Microsoft, Cisco, and — of course — Bitmovin. It’s 30% more efficient than HEVC and VP9, which drastically cuts bandwidth and delivery costs.

VP8

The VP8 codec is another open and royalty-free compression format that’s used primarily for WebRTC streaming. Many developers architecting WebRTC workflows are shifting their focus to its successor, VP9, so we anticipate a gradual decline in VP8 usage in the coming years.

VP9

VP9 is a well-supported video codec that’s suitable for both low-latency streaming and 4K. More than 90% of Chrome-encoded WebRTC videos take the form of VP9 or its predecessor VP8, and top TV brands like Samsung, Sony, LG, and Roku also support it.

We’ve been monitoring the adoption of these codecs for six years running by surveying video developers across the globe. Our CEO Stefan Lederer summarized this year’s findings as follows:

“H.264 remains the most popular codec among video developers, which is likely due to its more widespread browser and device support. Yet, when we look at the codecs developers plan to use in the short-term future, H.265/HEVC and AV1 are the two most popular codecs for live and VOD encoding. Personally, I am particularly excited to see the growing popularity of AV1, which has been boosted by more companies introducing support for it.”

– Stefan Lederer (CEO, Bitmovin)

Source: Video Developer Report

The good news is that Bitmovin’s transcoding service supports all of these codecs — giving you the flexibility to pick and choose based on your needs. We’re also committed to driving cutting-edge encoding technologies forward, so that our customers can adapt as the industry evolves.

Video quality vs. video resolution

High-resolution streams are often high-quality streams, but it’s not a guarantee. That’s because video quality is determined by several other factors such as frame rate, buffering, and pixelation.

Here’s how the two compare:

Resolution

Resolution describes the number of pixels displayed on a screen. The more pixels there are, the more stunning and crisp the picture. Nearly 4,000 pixels go across the width of a screen displaying 4K video, whereas 1080p content is 1,920 pixels wide and 1,080 pixels tall. Think of it as the difference between a paint-by-number kit and the original masterpiece you’re trying to recreate. Using more pixels allows the encoded file to maintain more detail in each individual frame.
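To put numbers on this, the short sketch below computes the pixels per frame for a few common 16:9 resolutions, using their standard width and height in pixels.

```python
# Standard 16:9 frame dimensions as (width, height) in pixels.
RESOLUTIONS = {
    "720p": (1280, 720),
    "1080p": (1920, 1080),
    "4K UHD": (3840, 2160),
}


def pixels_per_frame(name):
    """Total pixel count of a single frame at the named resolution."""
    width, height = RESOLUTIONS[name]
    return width * height


for name in RESOLUTIONS:
    print(f"{name}: {pixels_per_frame(name):,} pixels per frame")

ratio = pixels_per_frame("4K UHD") / pixels_per_frame("1080p")
print(f"4K UHD carries {ratio:.0f}x the pixels of 1080p")
```

A 4K UHD frame holds 8,294,400 pixels against 2,073,600 for 1080p, which is four times as much picture data for the encoder to compress in every frame.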

Video quality

Video quality is a broader and less scientific measurement. It’s impacted by the resolution, for sure, as well as the frame rate, keyframe interval, audio quality, color accuracy, and more. Remember that Game of Thrones episode that was too dark for fans to make out what was happening? The episode’s cinematographer Fabian Wagner said that HBO’s compression technology was to blame for the pixelation and muddy colors. In this case, even 8K streams of the episode wouldn’t have yielded improvements in video quality.

TL;DR: When it comes down to it, video quality is subjective and can be influenced by a multitude of factors, whereas video resolution is a cut-and-dried measurement of the number of pixels displayed.

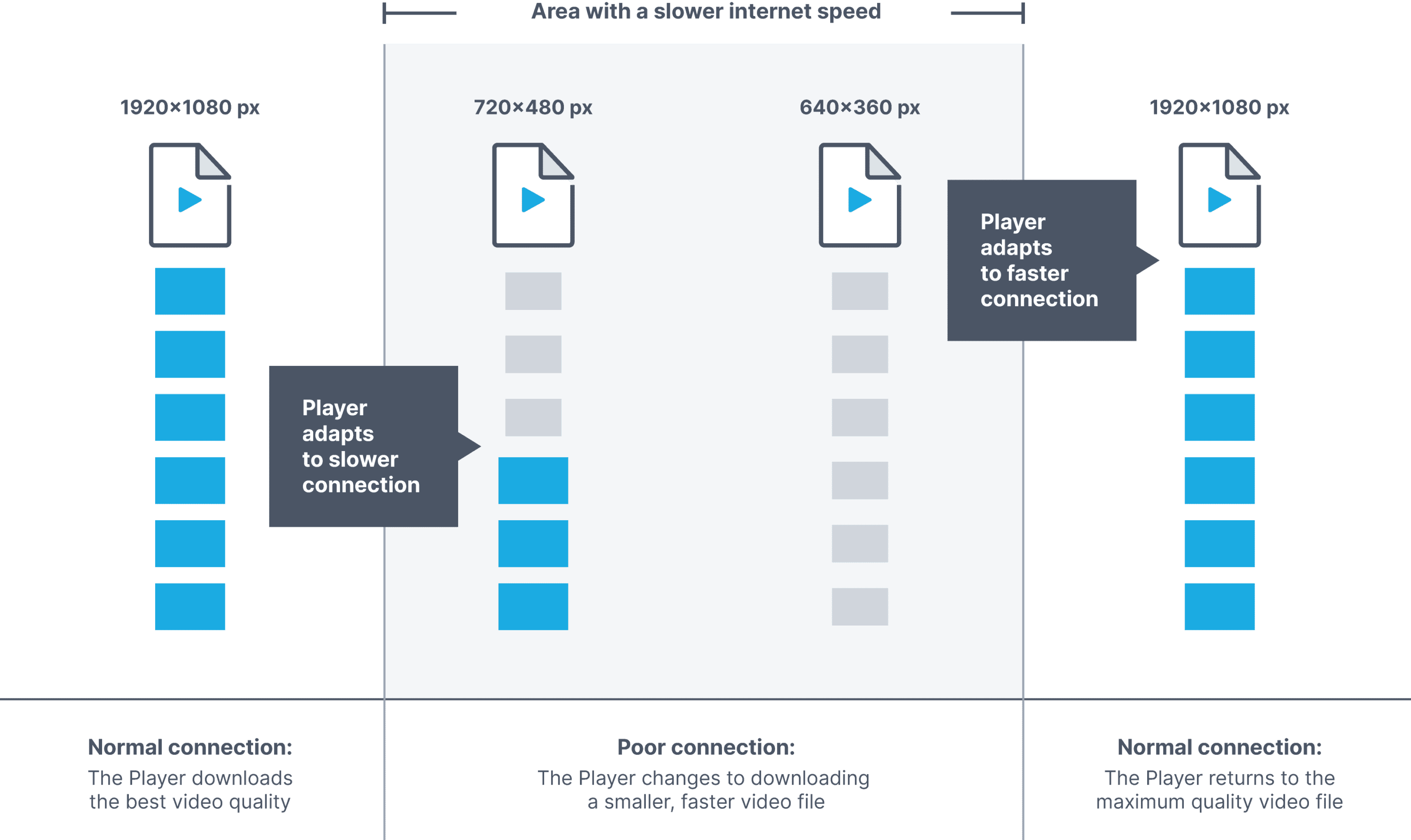

What is adaptive bitrate streaming?

The majority of video traffic today is delivered via adaptive bitrate streaming. If you’ve ever noticed a digital video change from fuzzy to sharp in a matter of seconds, you’re familiar with how it works.

Called ABR for short, adaptive bitrate streaming provides the best video quality and experience possible — no matter the connection, software, or device. It does so by enabling video streams to dynamically adapt to the screen and internet speed of each individual viewer.

Broadcasters distributing content via ABR use transcoding solutions like Bitmovin to create multiple renditions of each stream. These renditions fall on an encoding ladder, with high-bitrate, high-resolution streams at the top for viewers with high-tech setups, and low-quality, low-resolution encodings at the bottom for viewers with small screens and poor service.

The transcoder breaks each of these renditions into chunks that are approximately 4 seconds in length, which allows the player to dynamically shift between the different chunks depending on available resources.

The video player can then use whichever rendition is best suited for its display, processing power, and connectivity. Even better, if the viewer’s power and connectivity change mid-stream, the video automatically adjusts to another step on the ladder.
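The selection logic can be sketched in a few lines of Python. This is a simplified illustration rather than how any particular player works: the ladder values, the `pick_rendition` helper, and the 0.8 safety margin are all assumptions made for the example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Rendition:
    name: str
    height: int
    bitrate_kbps: int


# Hypothetical encoding ladder, ordered from highest to lowest bitrate.
LADDER = [
    Rendition("1080p", 1080, 5000),
    Rendition("720p", 720, 3000),
    Rendition("480p", 480, 1500),
    Rendition("360p", 360, 800),
]


def pick_rendition(throughput_kbps, screen_height, safety=0.8):
    """Pick the highest rung that fits the bandwidth budget and the screen."""
    budget = throughput_kbps * safety  # leave headroom for throughput dips
    for rendition in LADDER:
        if rendition.bitrate_kbps <= budget and rendition.height <= screen_height:
            return rendition
    return LADDER[-1]  # nothing fits, so fall back to the lowest rung


# Simulated throughput measurements, one per downloaded chunk.
for measured in [8000, 4200, 1200, 6500]:
    choice = pick_rendition(measured, screen_height=1080)
    print(f"{measured} kbps -> {choice.name}")
```

Because the decision is re-evaluated for every chunk, a drop in measured throughput moves the viewer down the ladder within a few seconds, and a recovery moves them back up.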

How to do multi-bitrate video encoding with Bitmovin

Encoding (or transcoding) your streams into a multi-bitrate ladder is required for ABR delivery. In some cases, this can be done as the RAW file is being encoded, but many broadcasters and video engineers opt to transcode the content into multiple bitrate options later in the video workflow. For this, you’ll need a live or VOD transcoding solution like Bitmovin.

Multi-bitrate video encoding, a.k.a. adaptive bitrate streaming, comes standard when processing videos with Bitmovin. Our platform can also easily be configured for per-title encoding using the Per-Title Template.

Here’s a look at the steps involved:

- Create an encoding in our API using version v1.53.0 or higher.

- Add a stream or codec configuration by providing information about which video stream of your input file will be used.

- Add muxings by defining the desired output format (whether fragmented MP4, MPEG-TS, etc.), as well as the segment length, streams to be used, and outputs.

- Start the per-title encoding using the Start Encoding API call. This can be configured for standard, two-pass, or three-pass encoding to achieve desired quality and bandwidth savings.

Per-Title automatically prepares content for adaptive streaming in the most optimal way. Use our Per-Title Encoding Tool (Bitmovin login required) with your own content to get a good overview of what Per-Title can do for you.

Common video encoding challenges

Ensuring playback support for the codecs you’re using

As shown in Jan Ozer’s Codec Compatibility chart below, different codecs are compatible with different devices. If you’re only encoding content for playback on iOS devices and Smart TVs, for instance, AV1 wouldn’t be the right fit. This is why it’s useful to leverage a video infrastructure solution like Bitmovin that can provide insight into which codecs are compatible with your viewers’ devices using analytics.

| Codec Compatibility | Browser | Mobile | Smart TV/OTT |
| --- | --- | --- | --- |
| H.264 | Virtually all | All | All |
| VP9 | Virtually all | Android, iOS | Most |
| HEVC | Very little | Android, iOS | All |
| AV1 | Edge, Firefox, Chrome, Opera | Android | Nascent |
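The table can also be expressed as a small lookup, which is roughly the kind of check you would run before committing to a codec. The sketch below transcribes the table's values as-is. The `codecs_for` helper and the choice to treat "Very little" and "Nascent" as insufficient support are assumptions made for illustration.

```python
# Support levels transcribed from the codec compatibility table.
COMPATIBILITY = {
    "H.264": {"browser": "Virtually all", "mobile": "All", "smart_tv": "All"},
    "VP9": {"browser": "Virtually all", "mobile": "Android, iOS", "smart_tv": "Most"},
    "HEVC": {"browser": "Very little", "mobile": "Android, iOS", "smart_tv": "All"},
    "AV1": {"browser": "Edge, Firefox, Chrome, Opera", "mobile": "Android", "smart_tv": "Nascent"},
}

# Assumption: these labels are treated as too limited for production reach.
WEAK_SUPPORT = {"Very little", "Nascent"}


def codecs_for(mobile_os=None, smart_tv=False):
    """List codecs whose table entry covers the requested playback targets."""
    matches = []
    for codec, support in COMPATIBILITY.items():
        mobile = support["mobile"]
        if mobile_os and mobile != "All" and mobile_os not in mobile:
            continue  # this mobile OS is not listed for the codec
        if smart_tv and support["smart_tv"] in WEAK_SUPPORT:
            continue  # TV support is too limited
        matches.append(codec)
    return matches


print(codecs_for(mobile_os="iOS", smart_tv=True))
```

For iOS plus Smart TV delivery, the lookup returns H.264, VP9, and HEVC and leaves out AV1, matching the example given earlier in this section.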

Solving for limited user bandwidth

Today’s viewers expect the same video experience on their mobile devices as they do on their Ethernet-connected Smart TVs. Adaptive bitrate delivery is crucial, with technologies like per-title encoding yielding additional opportunities to reduce bandwidth while still exceeding your audience’s expectations.

Justifying the costs of next-gen codecs

Emerging codecs are often computationally intensive (and expensive) to encode in real time. For this reason, you’ll want to make sure that a given video asset is worth this investment. While viral content will benefit from more advanced and efficient codecs, standard assets don’t always warrant this level of tech. A great way to weigh the benefits is by using analytics to determine what devices your audience is using, how many people are tuning in, and how your video performance compares to industry benchmarks. Another way, specifically for the AV1 codec, is to use our break-even calculator to estimate the number of views it takes to justify the cost of using AV1 in addition to H.264 or H.265.
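The underlying break-even arithmetic is simple: divide the extra encoding cost by the delivery savings per view. The sketch below is a simplified model and not the logic of Bitmovin's calculator. The `break_even_views` function and every figure passed to it are made-up placeholders.

```python
def break_even_views(extra_encoding_cost, gb_saved_per_view, cdn_cost_per_gb):
    """Views needed before delivery savings cover the extra encoding cost."""
    savings_per_view = gb_saved_per_view * cdn_cost_per_gb
    if savings_per_view <= 0:
        raise ValueError("The newer codec must save bandwidth to break even")
    return extra_encoding_cost / savings_per_view


# All figures below are hypothetical placeholders, not real pricing.
views = break_even_views(
    extra_encoding_cost=20.0,  # extra cost of encoding the asset in AV1 as well
    gb_saved_per_view=0.25,    # data saved per view compared with H.264
    cdn_cost_per_gb=0.02,      # delivery cost per gigabyte
)
print(f"Break-even after about {views:,.0f} views")
```

With these placeholder inputs the asset pays for its AV1 encode after roughly 4,000 views, so a title expected to reach far fewer viewers would not justify the extra encode.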

Ensuring low latency for live broadcasts

Many live and interactive broadcasts like live sports, e-commerce, online learning, and esports require sub-ten-second delivery. For these, you’ll want to use a video codec like H.264 that’s optimized for low-latency streaming. We’d also recommend finding a live transcoding solution that accepts emerging low-latency protocols like SRT and RIST.

Streaming video encoding glossary

Application-Specific Integrated Circuits (ASICs)

ASICs for video encoding are purpose-built video processing circuits designed to optimize performance, power consumption, and costs. Because they are manufactured for the specific application of video encoding and transcoding, ASICs can achieve high throughput and superior performance compared to general-purpose processors like CPUs and GPUs.

Bitrate

Bitrate refers to the amount of data transmitted in a given amount of time, measured in bits per second (bps). Higher bitrate streams include more data in the form of pixels, frames, and the like — resulting in higher quality. That said, they require more bandwidth for transmission and storage. On the other end of the spectrum, low-bitrate streams can be more easily viewed by users with poor internet connections, but quality suffers as a result of greater file compression.
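The relationship between bitrate, duration, and data volume is plain arithmetic: bits per second multiplied by seconds, divided by eight to get bytes. The sketch below applies it to three hypothetical bitrates for a 10-minute video, ignoring audio and container overhead.

```python
def stream_size_megabytes(bitrate_kbps, duration_seconds):
    """Approximate stream size: bits per second x seconds, converted to MB."""
    total_bits = bitrate_kbps * 1000 * duration_seconds
    return total_bits / 8 / 1_000_000


# Hypothetical bitrates for a 10-minute (600-second) video.
for label, kbps in [("high", 5000), ("medium", 3000), ("low", 800)]:
    size = stream_size_megabytes(kbps, duration_seconds=600)
    print(f"{label}: {kbps} kbps -> {size:.0f} MB")
```

The 5,000 kbps stream works out to 375 MB while the 800 kbps stream is 60 MB, which is why lower rungs are so much easier to deliver over poor connections.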

Frame rate

Frame rate refers to the number of individual frames displayed per second of video, measured in frames per second (fps). This determines the temporal smoothness and fluidity of motion in a video. Higher frame rates result in smoother motion, while lower frame rates introduce perceived choppiness or jerkiness. Common frame rates in video production and streaming include 24 fps (film standard), 30 fps (broadcast standard), and 60 fps (smooth motion and gaming). The appropriate frame rate selection depends on the content type, target platform, and desired viewing experience.

Graphics Processing Units (GPUs)

GPUs are hardware components designed to handle complex graphics computations and parallel processing tasks. Originally developed for accelerating graphics rendering in gaming and multimedia applications, GPUs have evolved into powerful processors capable of performing general-purpose computing tasks. They consist of thousands of cores that can execute multiple instructions simultaneously, making them highly efficient for parallelizable workloads. GPU-based video encoding solutions are both flexible and widely available, but they aren’t as specialized as application-specific integrated circuits (ASICs), defined above.

Group of pictures (GOP)

Also called the keyframe interval, a GOP is the distance between two keyframes measured by the total number of frames it contains. A shorter GOP means more frequent keyframes, which can enhance quality for fast-paced scenes but will result in a larger file size. A longer GOP means there are fewer keyframes in the encoding, which leads to encoding efficiency and decreased file size but could degrade video quality. Another consideration in determining the keyframe interval is the tendency for users to skip ahead or back to random points in a video. Shorter GOPs place more keyframes throughout the video to support viewing from these random access points.
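A quick calculation shows how these trade-offs play out. The sketch below converts a GOP length in frames into seconds, counts the keyframes in a clip, and checks whether the GOP lines up with a segment length. The `gop_stats` helper is hypothetical, and the alignment check assumes each streaming segment starts on a keyframe, which is typical for adaptive bitrate delivery.

```python
def gop_stats(gop_frames, fps, duration_seconds, segment_seconds=4):
    """Summarize what a keyframe interval means for a given video."""
    interval_seconds = gop_frames / fps
    total_frames = int(duration_seconds * fps)
    keyframes = -(-total_frames // gop_frames)  # ceiling division
    # Assumption: segments start on a keyframe, so the segment length
    # should be a whole multiple of the keyframe interval.
    aligned = (segment_seconds * fps) % gop_frames == 0
    return interval_seconds, keyframes, aligned


for gop in (30, 60, 250):
    seconds, keyframes, aligned = gop_stats(gop, fps=30, duration_seconds=60)
    print(f"GOP {gop}: keyframe every {seconds:.2f}s, "
          f"{keyframes} keyframes per minute, fits 4s segments: {aligned}")
```

At 30 fps, a 60-frame GOP gives a keyframe every two seconds and divides evenly into 4-second segments, while a 250-frame GOP saves keyframes but no longer aligns with the segment boundaries.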

Metadata

Metadata refers to descriptive or structural information that provides additional context and details about a piece of data. In the context of video streaming, metadata refers to the supplementary information about the content, such as title, description, duration, resolution, language, genre, release date, and more. Metadata can also include technical information like encoding settings, aspect ratio, and audio format. Metadata is essential for content organization, searchability, and providing an enhanced user experience. It’s typically embedded within the video file or delivered alongside the streaming data in a standardized format, allowing for easy retrieval and interpretation by video players, search engines, and content management systems.

MKV

Matroska Video, or MKV, is a popular open-source multimedia format that can store an unlimited number of video, audio, picture, and subtitle tracks in a single file. It’s also flexible in its support for codecs, accepting H.264, VP9, AV1, and more. As such, it’s a popular format for video distribution and digital archiving.

MPEG-4

Short for Moving Picture Experts Group-4, MPEG-4 is a group of audio and video compression standards developed by the International Organization for Standardization (ISO) and the International Electrotechnical Commission (IEC). Several codecs are defined by this standard, including H.264/AVC and AAC.

Video file

A video file, also called a video container, is a self-contained unit that holds the compressed video and audio content, as well as supporting metadata. These come in different formats, including MP4, MOV, AVI, FLV, and more. Different file formats accept different codecs and play back on different devices.

WebM

WebM is an open, royalty-free multimedia container format developed to provide a high-quality alternative to proprietary video formats. Google, Mozilla, and other leading companies developed this format, which utilizes VP8 or VP9 video codecs.

Video encoding FAQs

Does video encoding affect quality?

Yes and no. While an encoded video will always be of lower quality than the RAW file, today’s codecs are advanced enough to reduce the amount of data included without degrading quality in a way that viewers would notice.

Why do I need to encode a video?

No matter the industry or use case, encoding is a key step in the video delivery chain. It prepares the video for digital distribution and compresses the data into a more manageable size. It’s always taking place in the background for UGC applications like streaming to Twitch. And by using a professional hardware or software solution, broadcasters can tap into additional functionality.

What’s the difference between encoding with GPUs vs ASICs?

Encoding with GPUs involves different hardware architectures and approaches than encoding with ASICs. While GPUs are programmable and versatile across applications, ASICs are purpose-built for specific tasks, which makes ASICs expensive and less accessible. Currently, only companies like Facebook and Google are using ASICs for encoding, which provides fast turnaround times and better encoding capacity.

Why do I need to transcode a video?

If you’re serious about scaling your broadcasts to reach viewers on any device and connection speed, transcoding is vital. Most encoders support contribution protocols like RTMP and RTSP. While these protocols work great for video transmission, they’re ill-suited for end-user delivery and aren’t even supported on most viewer devices. You’ll want to transcode your videos into adaptive bitrate HLS or DASH to ensure that your stream reaches viewers and delivers the best experience possible.

How much does video encoding and transcoding cost?

True video encoding (meaning contribution encoding software and hardware) can range from cheap to expensive. And while software options like OBS are technically free, they have hidden costs associated with the computing equipment on which they’re deployed. Hardware encoders are more costly, but affordable options like the Blackmagic ATEM Mini Pro (a $295 piece of equipment) are also available.

When it comes to transcoding, you’ll want to consider both storage and egress costs to calculate the total cost of ownership (TCO). When using Bitmovin’s all-in-one platform, pricing is based on a simple fee per minute of video in each output, with rates depending on the features used. These include the chosen resolution, codecs, and use of multi-pass encoding. Our pricing can be offered either as pay-as-you-go or as a custom plan. Learn more here.

How is video encoding different from video compression?

Video encoding always involves compression, but video compression doesn’t always involve encoding. Rather, video encoding is one version of compression that involves converting RAW video data into a condensed format using video and audio codecs. Video compression can also take place during transcoding or even by compressing an MP4 on your hard drive into a lossless ZIP file.
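The lossless case is easy to demonstrate with Python's built-in `zlib` module, which implements the DEFLATE compression commonly used in ZIP archives. The byte string below is a made-up stand-in for file contents and is deliberately repetitive so that it compresses well.

```python
import zlib

# Stand-in for file contents; highly repetitive so it compresses well.
original = b"frame-data " * 1000

compressed = zlib.compress(original, level=9)
restored = zlib.decompress(compressed)

print(f"original:   {len(original)} bytes")
print(f"compressed: {len(compressed)} bytes")
print("identical after decompression:", restored == original)
```

Every byte comes back exactly as it went in, which is what distinguishes this from lossy video encoding. A real MP4 would shrink far less than this sample, because its contents have already been compressed by the video codec.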

What are the best encoding settings for quality?

The right encoding settings will always depend on your specific use case. For instance, action-packed streams of sporting events require a shorter keyframe interval than videos depicting static scenes such as talk shows. Similarly, a low frame rate works fine for surveillance footage, but wouldn’t be the right fit for sporting events.

You’ll want to strike a balance between quality and efficiency by avoiding unnecessarily high bitrates and overly complex codecs. Encoding expert Jan Ozer recommends the following:

“When you upload a file to an online video platform (OVP) or user-generated content (UGC) site, the mezzanine file you create will be transcoded into multiple ABR rungs. Given that video is a garbage-in/worse-garbage-out medium, the inclination is to encode at as high a data rate as possible. However, higher data rates increase upload time and the risk of upload failure.

It turns out that encoding a 1080p30 file above 10 Mbps delivers very little additional quality and that ProRes output may actually reduce quality as compared to a 100 Mbps H.264-encoded file.”

Source: Jan Ozer

What is the most effective way to encode/transcode a large volume of videos?

Cloud-based platforms like Bitmovin make it simple to transcode a large volume of videos with scalable, on-demand resources that can process multiple videos simultaneously. Streaming APIs and tools for integration also make it easy to automate your encoding workflow and streamline efficiencies.

What is per-title encoding?

Per-title encoding customizes the bitrate ladder of each encoded video based on complexity. This allows content distributors to find the sweet spot by dynamically identifying a bitrate that captures all of the information required to deliver a perfect viewing experience, without wasting bandwidth with unnecessary data. Rather than relying on predetermined bitrate ladders that aren’t the right fit for every type of content, per-title encoding ensures that resources are used efficiently by tailoring the encoding settings on a per-video basis.
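As a toy illustration of the idea, the sketch below scales a default ladder by a per-video complexity score. It is not Bitmovin's Per-Title algorithm: real systems derive complexity by analyzing the content itself, whereas here the score, the base ladder, and the `per_title_ladder` helper are all assumed for the example.

```python
# Hypothetical default ladder: (height, bitrate in kbps).
BASE_LADDER = [(1080, 5000), (720, 3000), (480, 1500), (360, 800)]


def per_title_ladder(complexity, floor_kbps=300):
    """Scale each rung by a complexity score (1.0 = average content)."""
    return [
        (height, max(floor_kbps, round(kbps * complexity)))
        for height, kbps in BASE_LADDER
    ]


# Assumed scores: simple animation compresses easily, fast sports does not.
print("cartoon:", per_title_ladder(0.6))
print("sports: ", per_title_ladder(1.3))
```

Low-complexity content gets a cheaper ladder at the same resolutions, while high-motion content is given extra bitrate at every rung instead of being squeezed into a one-size-fits-all preset.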

What is H.264 video encoding?

Also referred to as Advanced Video Coding, H.264/AVC is a widely supported codec with significant penetration into streaming, cable broadcasting, and even Blu-ray discs. It plays on virtually any device and delivers quality video streams, but is gradually declining in usage due to more advanced alternatives like H.265/HEVC and AV1. We cover all of the popular encoding formats in more detail in our annual Video Developer Report.

Conclusion

Thanks to video encoding and transcoding technologies, today’s viewers have access to anywhere, anytime content delivered in a digital format. The ability to compress streaming data for efficient delivery (without sacrificing quality) is key to staying competitive in the online video market. And for that, you need to architect your video workflow using the right technologies.

Once you’ve mastered everything that goes into encoding, the next step is finding a video processing platform for multi-device delivery and transcoding. At Bitmovin, we deliver video infrastructure to broadcasters building world-class video platforms. Our live and VOD platforms can ingest streams from any of the encoders detailed above and output HLS and DASH for delivery to streaming services and end users.

Find out how you can achieve the highest quality of experience on the market and deliver unbreakable streams. Get started with a free trial today or reach out to our team of experts.

As always, we’re here to help you navigate the complex world of streaming and simplify your workflow.