Originally published at: Apple’s new Managed Media Source: Everything you need to know [2023]

At their 2023 Worldwide Developers Conference (WWDC), Apple announced a new Managed Media Source API. This post explains the new functionality and its improvements over prior methods, which enable more efficient video streaming and longer battery life on iOS devices. Keep reading to learn more.

- Background and the “old” MSE

- New Managed Media Source in Safari 17

- AirPlay with MMS

- Migration from MSE to MMS

- Next Steps

Background and the “old” MSE

The first internet videos of the early 2000s were powered by plugins like Flash and QuickTime, separate software that had to be installed and maintained alongside the web browser. In 2010, HTML5 was introduced, with a <video> tag that made it possible to embed video without plugins. This was a much simpler and more flexible way to add video to websites, but it had some limitations. Apple’s HTTP Live Streaming (HLS) made adaptive streaming possible, but developers wanted more control and flexibility than native HLS offered, such as the ability to select media or play DRM-protected content. In 2013, the Media Source Extensions (MSE) specification was published by the W3C, providing a low-level toolkit that gave applications more control over buffering and resolution for adaptive streaming. MSE was quickly adopted by all major browsers and is now the most widely used web video technology…except on iPhones. MSE has some inefficiencies that lead to greater power use than native HLS, and Apple’s testing found that adding MSE support would have reduced battery life, so the benefits of MSE have been unavailable on iPhone…until now.

New Managed Media Source in Safari 17

With MSE, it can be difficult to achieve the same quality of playback possible with HLS, especially on lower-power devices and under spotty network conditions. This is partly because MSE transfers most control over the streaming of media data from the User Agent to the application running in the page. But the page doesn’t have the same level of knowledge, or even the same goals, as the User Agent, and may request media data at any time, often at the expense of higher power usage. To address those drawbacks and combine the flexibility provided by MSE with the efficiency of HLS, Apple created a new Managed Media Source API (MMS).

The new “managed” MediaSource gives the browser more control over the MediaSource and its associated objects. It makes it easier to support streaming media playback on mobile devices, while allowing User Agents to react to changes in memory usage and networking capabilities. MMS can reduce power usage by telling the webpage when it’s a good time to load more media data from the network. When nothing is requested, the cellular modem can go into a low power state for longer periods of time, increasing battery life. When the system gets into a low memory state, MMS may clear out buffered data as needed to reduce memory consumption and keep operations of the system and the app stable. MMS also tracks when buffering should start and stop, so the browser can detect low buffer and full buffer states for you. Using MMS will save your viewers bandwidth and battery life, allowing them to enjoy your videos for even longer.

AirPlay with MMS

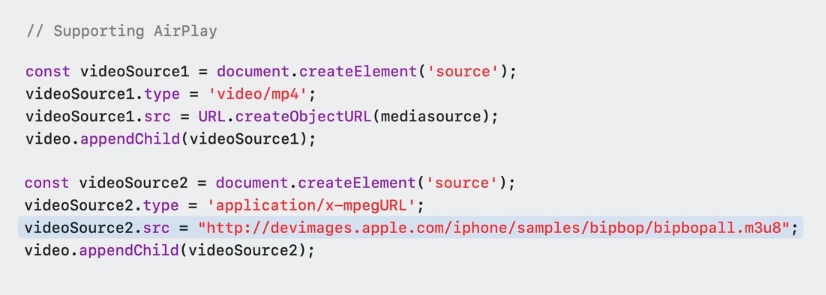

One of the great things about native HLS support in Safari is the automatic support for AirPlay, which lets viewers stream video from their phone to compatible smart TVs and set-top boxes. AirPlay requires a URL that can be sent to the receiving device, but no such URL exists with MSE, making the two incompatible. With MMS, you can add an HLS playlist as a child source element of the video, and when the user AirPlays your content, Safari will switch away from your Managed Media Source and play the HLS stream on the AirPlay device. It’s a slick way to get the best of both worlds.
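The child source element setup described above could look roughly like the following. This is a minimal sketch, not a definitive implementation: the function name `attachSourcesWithAirPlayFallback`, the `video/mp4` type, the playlist URL passed in, and the injectable `doc` and `urlApi` parameters are all assumptions made for illustration.

```javascript
// Sketch only: attach a Managed Media Source plus an HLS fallback to a <video>.
// `doc` and `urlApi` default to the browser globals and are injectable so the
// function can also be exercised outside a browser.
function attachSourcesWithAirPlayFallback(video, mediaSource, hlsUrl, doc = document, urlApi = URL) {
  // First child: object URL backed by the (Managed)MediaSource, for local playback.
  const mseSource = doc.createElement("source");
  mseSource.type = "video/mp4"; // assumed container type of the appended segments
  mseSource.src = urlApi.createObjectURL(mediaSource);
  video.appendChild(mseSource);

  // Second child: a plain HLS playlist URL that can be handed to an AirPlay receiver.
  const hlsSource = doc.createElement("source");
  hlsSource.type = "application/x-mpegURL";
  hlsSource.src = hlsUrl;
  video.appendChild(hlsSource);

  return [mseSource, hlsSource];
}
```

In a page this would be called as `attachSourcesWithAirPlayFallback(videoElement, mediaSource, playlistUrl)`. If no HLS alternative exists, the standard `disableRemotePlayback` attribute on the video element is the usual way to opt out of remote playback instead.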

Migration from MSE to MMS

Managed Media Source is designed to be backwards compatible. The first step is therefore to check whether the API is available and, if it is, create a ManagedMediaSource object instead of a MediaSource object:

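    function getMediaSource() {
      // Prefer the managed variant where the browser offers it.
      if (window.ManagedMediaSource) {
        return new window.ManagedMediaSource();
      }
      // Fall back to the classic Media Source Extensions API.
      if (window.MediaSource) {
        return new window.MediaSource();
      }
      throw new Error("No MediaSource API available");
    }

    const mediaSource = getMediaSource();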

Because MMS supports all the methods the “old” MSE does, this is all you need to get started, but it doesn’t unleash the full power of the new API. For that, you need to handle several new events:

    mediaSource.addEventListener("startstreaming", onStartStreamingHandler);

The startstreaming event indicates that more media data should now be loaded from the network.

    mediaSource.addEventListener("endstreaming", onStopStreamingHandler);

The endstreaming event is the counterpart of startstreaming and signals that, for now, no more media data should be requested from the network. This status can also be checked via the streaming attribute on the MMS instance. On devices like iPhones (once fully available) and iPads, requests that follow these two hints benefit from the fast 5G network and allow the device to drop into a low-power state between request batches.
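To make the interplay of the two events more concrete, here is a minimal sketch of a download loop that only runs between startstreaming and endstreaming. The helper name `attachStreamingGate` and the `loadNextSegment` callback are hypothetical; the callback stands in for whatever the player uses to fetch and append one segment, and is assumed to resolve to `false` when nothing is left to load.

```javascript
// Sketch only: gate segment downloads on the startstreaming / endstreaming hints.
// `loadNextSegment` is a hypothetical async callback supplied by the player.
function attachStreamingGate(mediaSource, loadNextSegment) {
  // Start from the current `streaming` attribute if the object exposes one.
  let allowed = Boolean(mediaSource.streaming);
  let running = false; // true while the download loop is active

  async function pump() {
    if (running) return; // never run two loops at once
    running = true;
    try {
      while (allowed) {
        const more = await loadNextSegment();
        if (!more) break; // nothing left to load
      }
    } finally {
      running = false;
    }
  }

  const start = () => {
    allowed = true;
    pump().catch((err) => console.error("Segment loading failed:", err));
  };
  const stop = () => {
    // The loop ends once the segment currently in flight has completed.
    allowed = false;
  };

  mediaSource.addEventListener("startstreaming", start);
  mediaSource.addEventListener("endstreaming", stop);
  if (allowed) start();
}
```

Letting the in-flight segment finish instead of aborting it is a design choice in this sketch; a player could also cancel the request when endstreaming fires.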

In addition, the current implementation also offers a hint about the preferred quality to download. The browser suggests whether a high, medium, or low quality should be requested. The user agent may base this on facts like network speed, but also on additional details such as user settings for data saver modes. The hint can be read from the MMS instance’s quality property, and any change is signaled via a qualitychange event:

    mediaSource.addEventListener("qualitychange", onQualityChangeHandler);

It remains to be seen if the quality hint will still be available in the future as it offers some risk of fingerprinting.
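As an illustration of how a player might act on the hint, the sketch below maps the three values named above to one of the player’s own renditions. The function `pickRendition` and the `{ bitrate }` rendition objects are assumptions for this example. Because the quality property is experimental, a missing or unknown value simply leaves the choice unrestricted.

```javascript
// Sketch only: map the browser's quality hint to one of the player's renditions.
// `renditions` is a hypothetical array of objects with a numeric `bitrate`.
function pickRendition(renditions, qualityHint) {
  if (renditions.length === 0) {
    throw new Error("No renditions available");
  }
  // Sort a copy from lowest to highest bitrate so the input is left untouched.
  const sorted = [...renditions].sort((a, b) => a.bitrate - b.bitrate);
  switch (qualityHint) {
    case "low":
      return sorted[0];
    case "medium":
      return sorted[Math.floor((sorted.length - 1) / 2)];
    case "high":
    default:
      // Unknown or missing hint: do not restrict the choice.
      return sorted[sorted.length - 1];
  }
}
```

A qualitychange handler could then call `pickRendition(renditions, mediaSource.quality)` and treat the result as an upper bound for the player’s regular adaptation logic.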

Because MMS may remove any data range at any given time (as opposed to MSE, where this could only happen while appending data), it is strongly recommended to check whether the data needed next is still present or needs to be downloaded again.
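One way to perform that check is to compare the segments the player believes it has appended against the buffered time ranges of the source buffer. The following is a minimal sketch; the helper names and the `{ start, end }` segment objects (in seconds) are assumptions, and `buffered` is any TimeRanges-like object exposing `length`, `start(i)`, and `end(i)`.

```javascript
// Sketch only: true if [start, end] lies entirely inside one buffered range.
// Buffered ranges do not overlap, so a contiguous span is fully buffered
// only when a single range contains it.
function isRangeBuffered(buffered, start, end) {
  for (let i = 0; i < buffered.length; i += 1) {
    if (buffered.start(i) <= start && buffered.end(i) >= end) {
      return true;
    }
  }
  return false;
}

// Returns the segments that are no longer fully buffered and would have to be
// downloaded and appended again before they can be played.
function segmentsToRefetch(buffered, segments) {
  return segments.filter((seg) => !isRangeBuffered(buffered, seg.start, seg.end));
}
```

Real streams often have tiny gaps or rounding differences at segment boundaries, so a production player would likely add a small tolerance to these comparisons.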

Next Steps

Managed Media Source is already available in the current Safari Technology Preview on macOS and in Safari 17 on iPadOS 17 beta, and can be enabled as an experimental feature on iOS 17 beta. Once it is generally available on iOS, without being an experimental feature, it will finally bring lots of flexibility and choice to Safari, other browsers, and apps with WebViews on iOS. It would even be possible to finally support DASH streams on iOS while keeping web apps power efficient.

Apple has already submitted the Managed Media Source API proposal to the World Wide Web Consortium (W3C). The proposal is under discussion and might lead to an open standard that other browser vendors could adopt.

Bitmovin will be running technical evaluations to fully explore and understand the benefits of MMS, including how it performs in various real-world environments. We will closely follow the progress from Apple and consider introducing support for MMS into our Web Player SDK once it advances from being an experimental feature on iOS. Stay tuned!

If you’re interested in more detail, you can watch the replay of the media formats section from WWDC23 here and read the release notes for Safari 17 beta and iOS & iPadOS 17 beta. You can also check out our MSE demo code and our blog about developing a video player with structured concurrency.